40 confident learning estimating uncertainty in dataset labels

Confident Learning: Estimating Uncertainty in Dataset Labels - ReadkonG. Page topic: "Confident Learning: Estimating Uncertainty in Dataset Labels - arXiv.org". Created by: Marcus Perez. Language: English.

An Introduction to Confident Learning: Finding and Learning with Label Errors in Datasets. Curtis Northcutt (Mod), replying to Justin Stuck, 3 years ago: Hi, thanks for the questions. Yes, multi-label is supported, but is alpha (use at your own risk). You can set `multi_label=True` in the `get_noise_indices()` function and other functions.

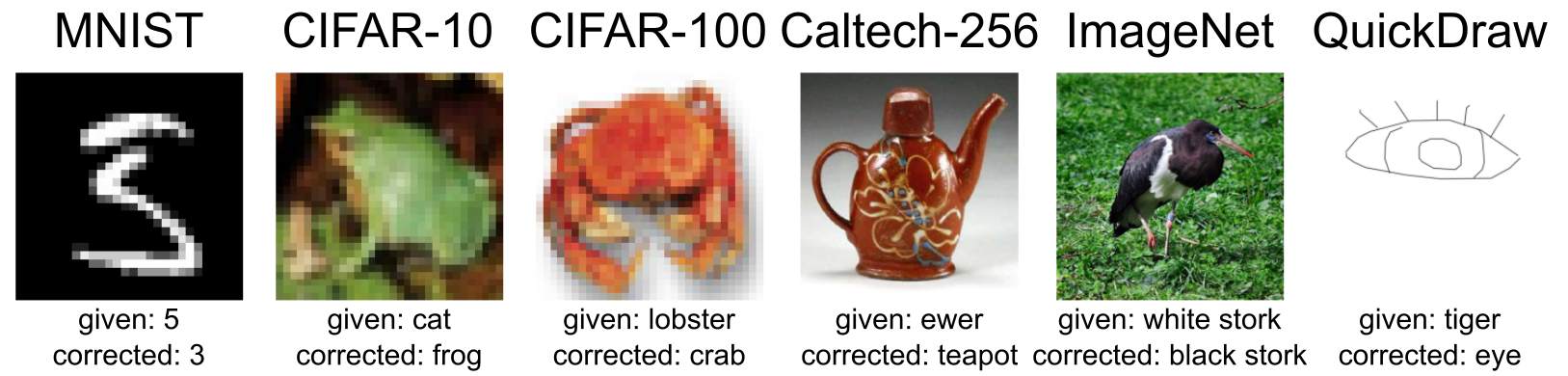

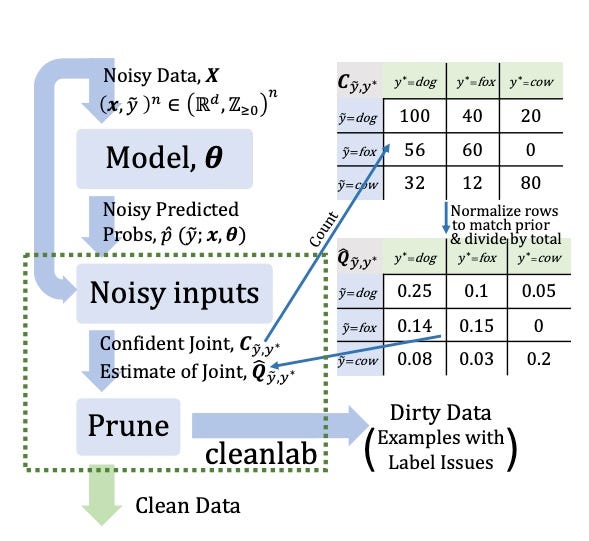

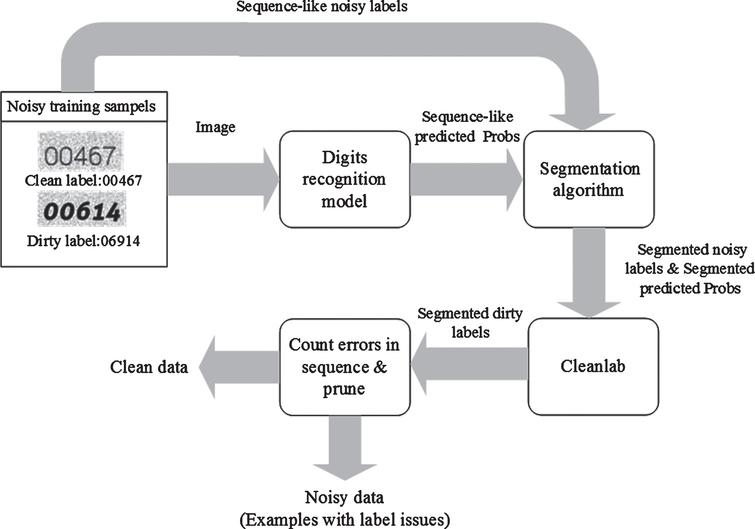

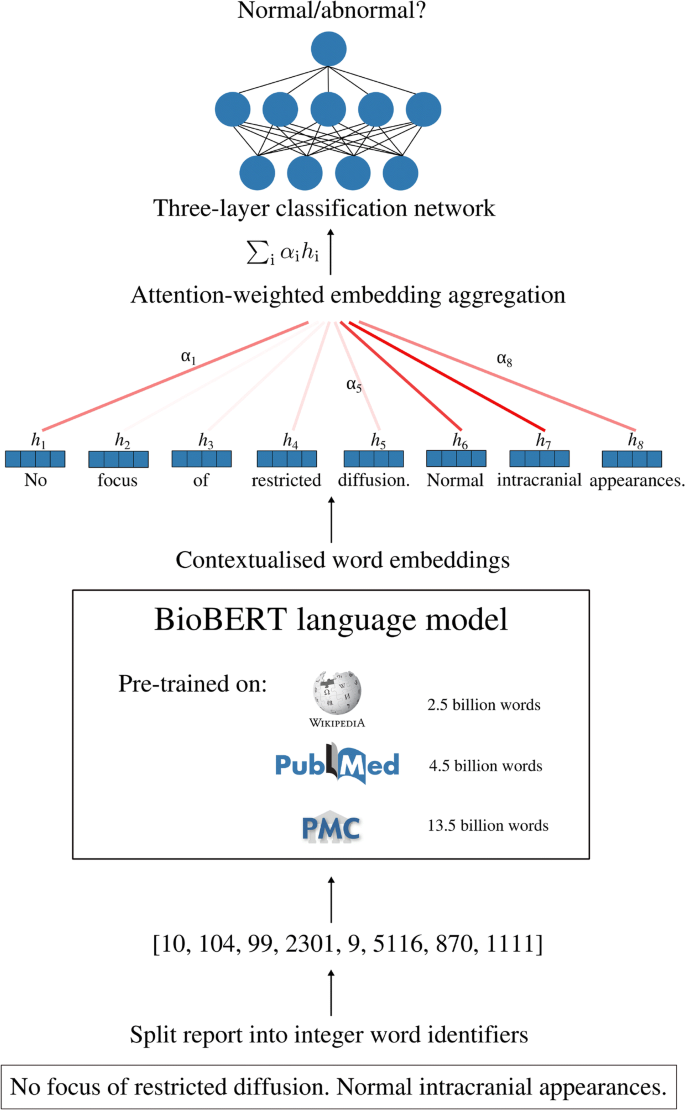

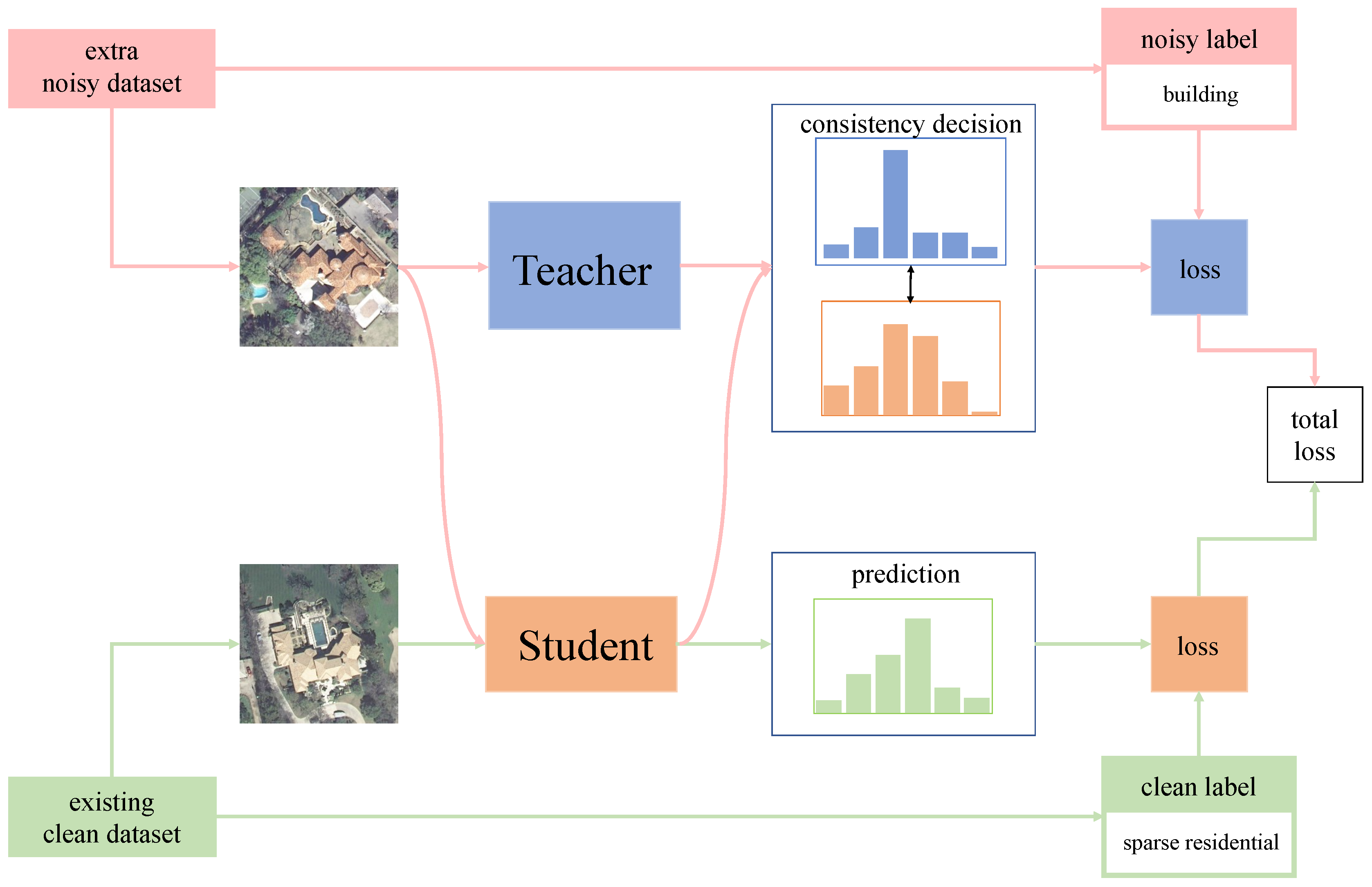

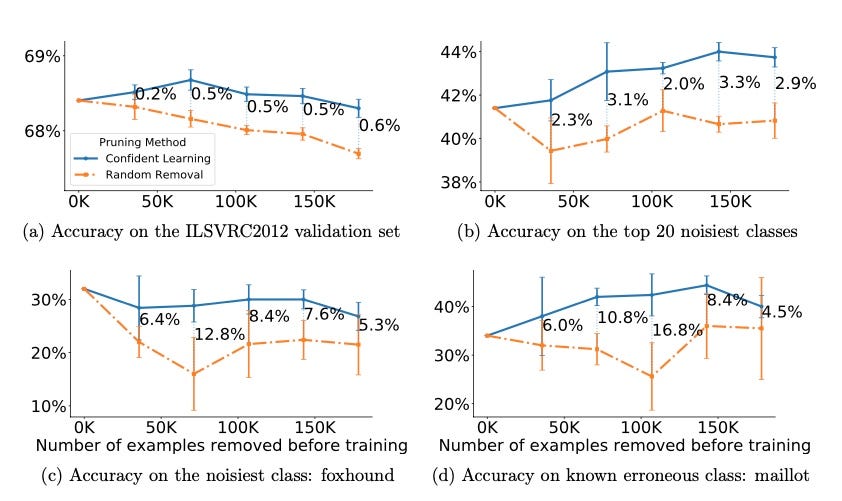

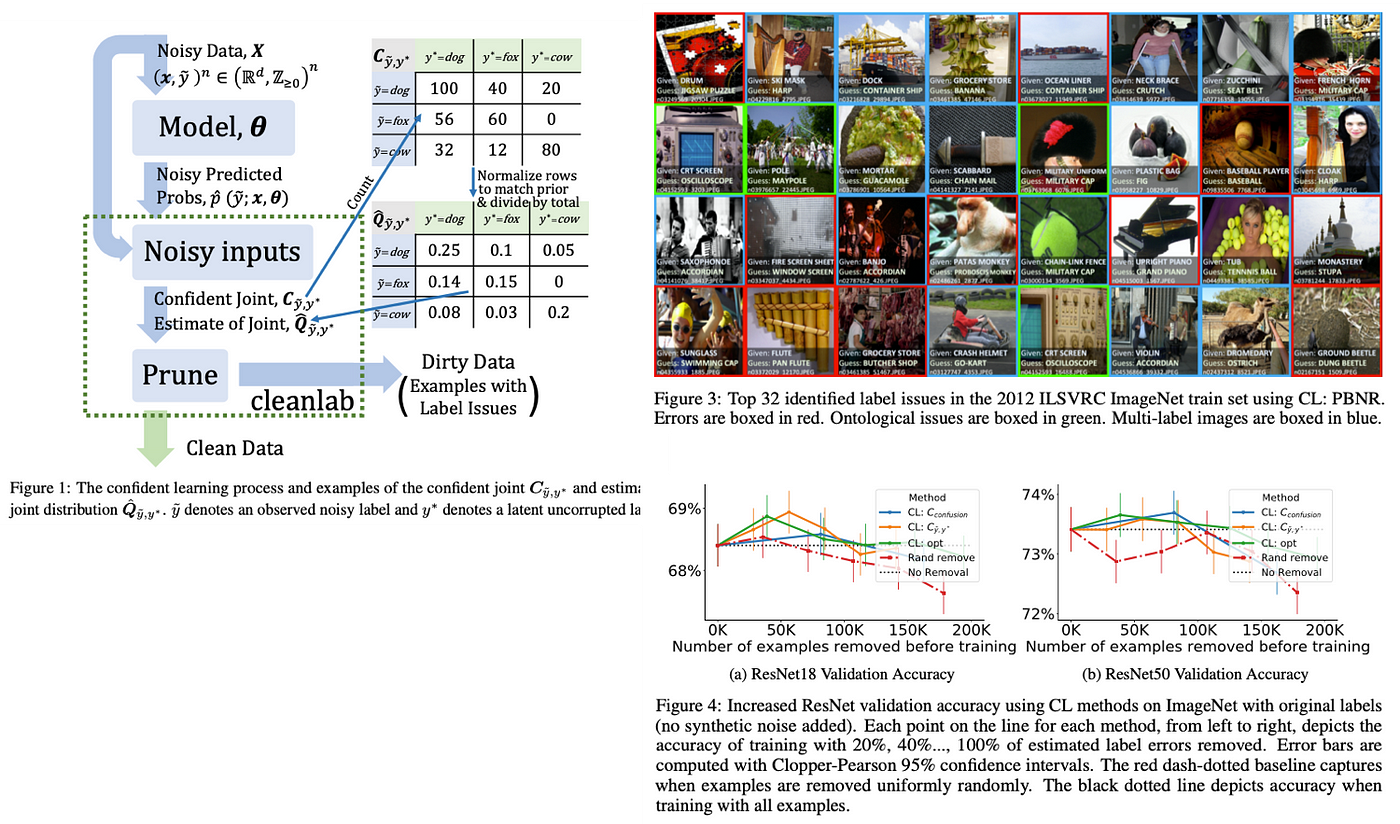

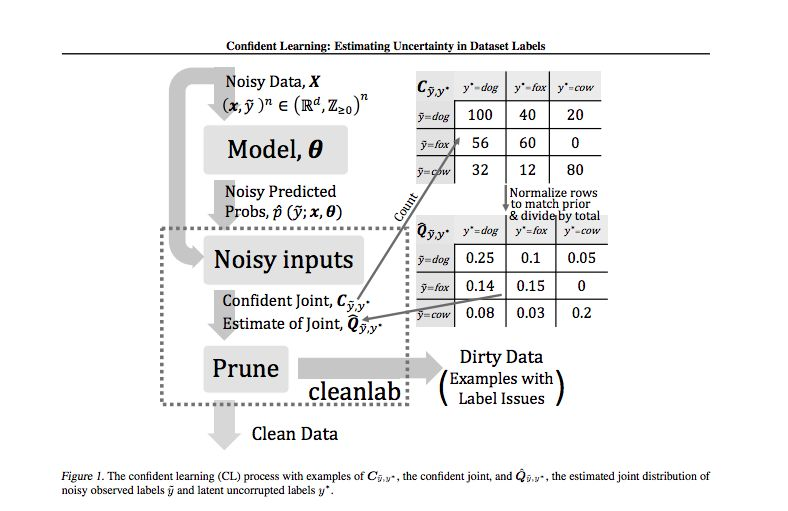

Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate noise, and ranking examples to train with confidence. Here, we generalize CL, building on the assumption of a ...

Confident learning estimating uncertainty in dataset labels

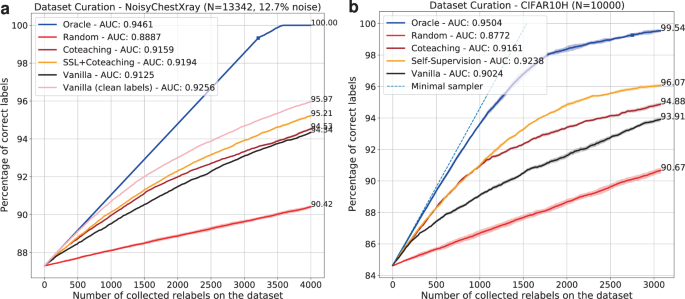

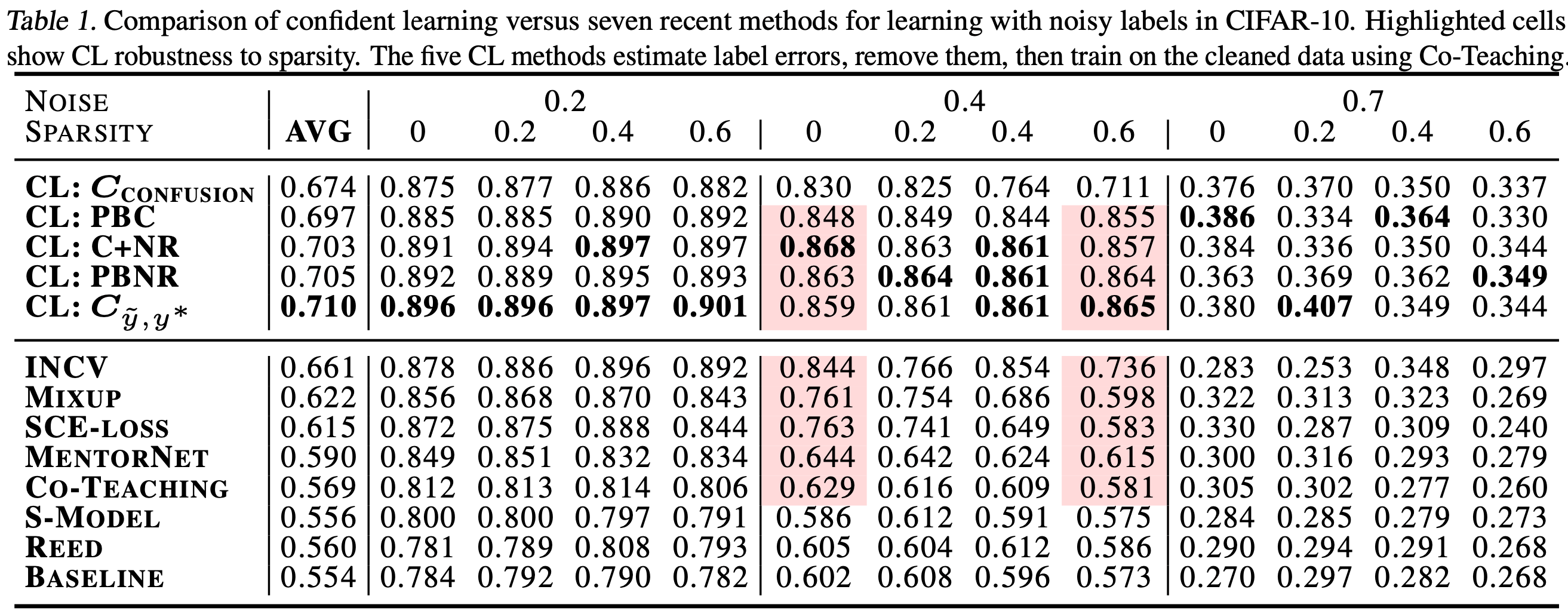

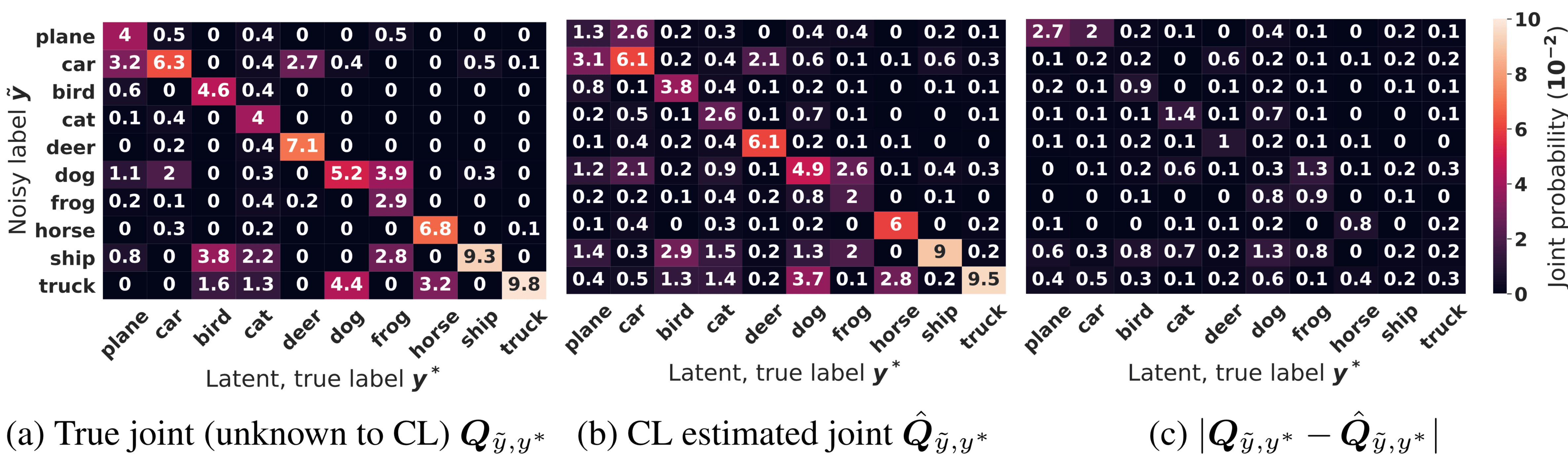

Confident Learning: Estimating Uncertainty in Dataset Labels - Researchain. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

Figure 5: Absolute difference of the true joint $Q_{\tilde{y}, y^*}$ and the joint distribution estimated using confident learning $\hat{Q}_{\tilde{y}, y^*}$ on CIFAR-10, for 20%, 40%, and 70% label noise, 20%, 40%, and 60% sparsity, for all pairs of classes in the joint distribution of label noise. - "Confident Learning: Estimating Uncertainty in Dataset Labels"
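The "counting with probabilistic thresholds" principle and the joint of given and true labels mentioned above can be illustrated with a minimal sketch. It assumes each example has a given (possibly noisy) integer label and a vector of predicted class probabilities. The function name `confident_joint` and the toy data are illustrative only and are not taken from the paper or from any library. The sketch covers only the counting step and leaves out the calibration, pruning, and ranking steps.

```python
def confident_joint(labels, pred_probs, num_classes):
    """Count examples by (given label, confidently predicted label).

    Hypothetical helper for illustration. An example counts toward class j
    only if its predicted probability for j reaches the per-class threshold,
    taken here as the mean predicted probability of j among examples
    labeled j.
    """
    sums = [0.0] * num_classes
    counts = [0] * num_classes
    for y, probs in zip(labels, pred_probs):
        sums[y] += probs[y]
        counts[y] += 1
    # A class with no labeled examples can never be confidently predicted.
    thresholds = [
        sums[j] / counts[j] if counts[j] else float("inf")
        for j in range(num_classes)
    ]

    joint = [[0] * num_classes for _ in range(num_classes)]
    flagged = []  # indices whose confident class disagrees with the given label
    for i, (y, probs) in enumerate(zip(labels, pred_probs)):
        confident = [j for j in range(num_classes) if probs[j] >= thresholds[j]]
        if not confident:
            continue  # no class is confidently predicted; example is not counted
        likely = max(confident, key=lambda j: probs[j])
        joint[y][likely] += 1
        if likely != y:
            flagged.append(i)
    return joint, flagged


# Toy data (made up): example 2 is labeled 0 but looks like class 1.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.90, 0.10],
    [0.80, 0.20],
    [0.10, 0.90],
    [0.15, 0.85],
    [0.40, 0.60],
    [0.05, 0.95],
]
joint, flagged = confident_joint(labels, pred_probs, num_classes=2)
```

Off-diagonal entries of `joint` count candidate label errors, and `flagged` lists their indices. Normalizing the counts gives a rough estimate of the joint distribution of given and true labels.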

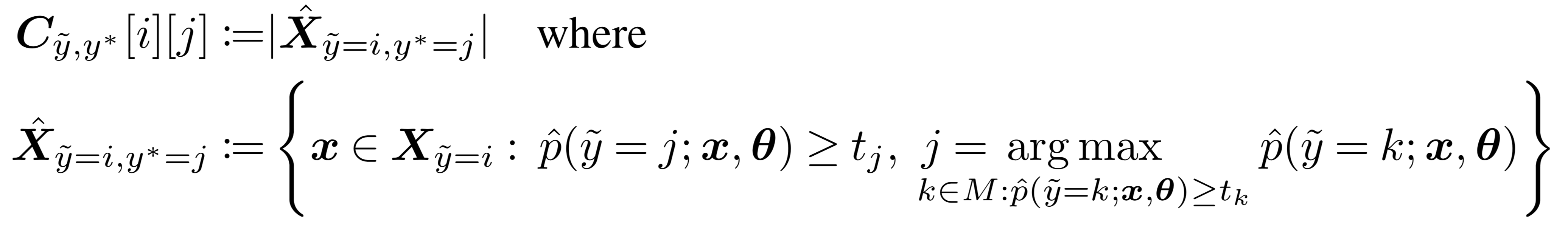

Confident Learning: Estimating Uncertainty in Dataset Labels

$$t_j = \frac{1}{|X_{\tilde{y}=j}|} \sum_{x \in X_{\tilde{y}=j}} \hat{p}(\tilde{y}=j; x, \theta) \tag{2}$$

Unlike prior art ...

Title: Confident Learning: Estimating Uncertainty in Dataset Labels. Curtis G. Northcutt, Lu Jiang, Isaac L. Chuang (Submitted on 31 Oct 2019 (v1), revised 15 Feb 2021 (this version, v4), latest version 8 Apr 2021 (v5)). Learning exists in the context of data, yet notions of $\textit{confidence}$ typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ...
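As a small worked illustration of the per-class threshold in equation (2), the sketch below averages, for each class j, the predicted probability of j over the examples whose given label is j. The function name `class_thresholds` and the toy inputs are assumptions made for this example and do not come from the paper or any library.

```python
from collections import defaultdict


def class_thresholds(labels, pred_probs):
    """Return {j: t_j}, where t_j is the mean predicted probability of
    class j over the examples whose given (possibly noisy) label is j.

    Hypothetical helper for illustration only.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for label, probs in zip(labels, pred_probs):
        sums[label] += probs[label]
        counts[label] += 1
    return {j: sums[j] / counts[j] for j in counts}


# Toy inputs (made up): two examples labeled 0 and two labeled 1.
labels = [0, 0, 1, 1]
pred_probs = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8], [0.7, 0.3]]
thresholds = class_thresholds(labels, pred_probs)
# t_0 averages 0.9 and 0.6; t_1 averages 0.8 and 0.3.
```

Because each threshold is a class-specific average, a class the model predicts with systematically lower confidence gets a lower bar.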


![[R] Announcing Confident Learning: Finding and Learning with ...](https://external-preview.redd.it/JyNe3XlRAW8dLTeUjHMVD_W3Exh6KXJv0Znqhd6aE7E.jpg?auto=webp&s=c16c72d9b65f4dee5f0859b36de39811ba27404d)

![[PDF] Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/26-Figure11-1.png)

![[Paper Reading] Learning with Noisy Label - Low-cost deployment of deep learning - Zhihu](https://pic2.zhimg.com/v2-80277171b5896eb794b5600dc526a8e5_b.jpg)

![Active Learning in Machine Learning [Guide & Examples]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/633a98dcd9b9793e1eebdfb6_HERO_Active%20Learning%20.png)
